
Condor-G is a "window to the grid." The function of Condor-G becomes clear with a brief overview of the software that forms a Condor pool. For this discussion, the software of a Condor pool is divided into two parts. The first part does job management. It keeps track of a user's jobs. You can ask the job management part of Condor to show you the job queue, to submit new jobs to the system, to put jobs on hold, and to request information about jobs that have completed. The other part of the Condor software does resource management. It keeps track of which machines are available to run jobs, how the available machines should be utilized given all the users who want to run jobs on them, and when a machine is no longer available. Condor-G works with grid resources, allowing users to effectively submit jobs, manage jobs, and have jobs execute on widely distributed machines.

A machine with the job management part installed is called a submit machine. A machine with the resource management part installed is called an execute machine. Each machine may have one part or both. Condor-G is the job management part of Condor. Condor-G lets you submit jobs into a queue, have a log detailing the life cycle of your jobs, manage your input and output files, along with everything else you expect from a job queuing system.

Condor uses Globus to provide underlying software needed to utilize grid resources, such as authentication, remote program execution and data transfer. Condor's capabilities when executing jobs on Globus resources have significantly increased. The same Condor tools that access local resources are now able to use the Globus protocols to access resources at multiple sites.

Condor-G is a program that manages both a queue of jobs and the resources from one or more sites where those jobs can execute. It communicates with these resources and transfers files to and from these resources using Globus mechanisms. (In particular, Condor-G uses the GRAM protocol for job submission, and it runs a local GASS server for file transfers.)

It may appear that Condor-G is a simple replacement for the Globus toolkit's globusrun command. However, Condor-G does much more. It allows you to submit many jobs at once and then to monitor those jobs with a convenient interface, receive notification when jobs complete or fail, and maintain your Globus credentials which may expire while a job is running. On top of this, Condor-G is a fault-tolerant system; if your machine crashes, you can still perform all of these functions when your machine returns to life.

NOTE: This version of the manual has correct installation instructions for Condor-G Version 6.6.0, but other usage information has not yet been updated.

The Globus software provides a well-defined set of protocols that allow authentication, data transfer, and remote job submission.

Authentication is a mechanism by which an identity is verified. Once an identity has been authenticated, authorization to use a resource is still required. Authorization is a policy that determines who is allowed to do what.

Condor-G allows the user to treat the Grid as a local resource, and the same command-line tools perform basic job management such as:

These are features that Condor has provided for many years. Condor-G extends this to the grid, providing resource management while still providing fault tolerance and exactly-once execution semantics.

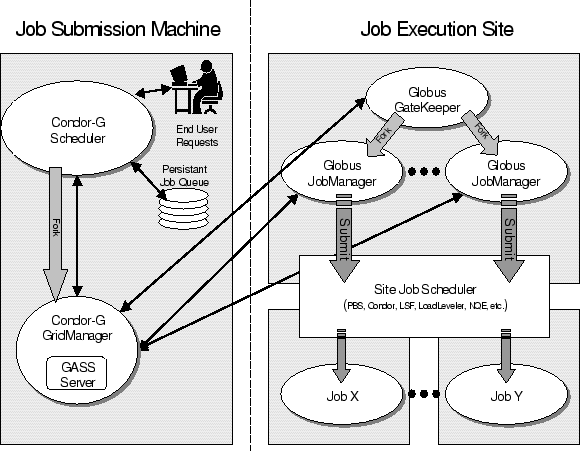

Figure 5.1 shows how Condor-G interacts with Globus protocols. Condor-G contains a GASS server, used to transfer the executable, stdin, stdout, and stderr to and from the remote job execution site. Condor-G uses the GRAM protocol to contact the remote Globus Gatekeeper and request that a new job manager be started. GRAM is also used to monitor the job's progress. Condor-G detects and intelligently handles failures such as a crash of the remote Globus resource.

There are two steps necessary before a globus universe job can be submitted:

    GRIDMANAGER = $(SBIN)/condor_gridmanager
    GAHP = $(SBIN)/gahp_server
    MAX_GRIDMANAGER_LOG = 64000
    GRIDMANAGER_DEBUG = D_COMMAND
    GRIDMANAGER_LOG = $(LOG)/GridLogs/GridmanagerLog.$(USERNAME)
    GLIDEIN_SERVER_NAME = gridftp.cs.wisc.edu
    GLIDEIN_SERVER_DIR = /p/condor/public/binaries/glidein

If Condor-G is installed as root, the file set by the configuration variable GRIDMANAGER_LOG must have world-write permission. All of the parent directories for this file must also have world-execute permission. The Gridmanager runs as the user who submitted the job, so the Gridmanager may not be able to write to the ordinary $(LOG) directory. Use of the definition of GRIDMANAGER_LOG shown above will likely require the creation of the directory $(LOG)/GridLogs. Permissions on this directory should be set by running chmod using the value 1777.

Another option is to locate the Gridmanager log files somewhere else, like so:

GRIDMANAGER_LOG = /tmp/GridmanagerLog.$(USERNAME)

If you make any changes to the configuration file while Condor is running, you will need to issue a condor_reconfig command.

Two additional configuration variables may be useful, in the case that a user submits many jobs at one time. The configuration variable GRIDMANAGER_MAX_SUBMITTED_JOBS_PER_RESOURCE limits the number of jobs that a condor_gridmanager daemon will submit to a resource. It is useful for controlling the number of jobmanager processes running on the front-end node of a cluster. As an example, consider the case where GRIDMANAGER_MAX_SUBMITTED_JOBS_PER_RESOURCE is set to 100. A user submits 1000 jobs to Condor-G, all intended to run on the same resource. Condor-G will start by only submitting 100 jobs to that resource. As each submitted job completes, Condor-G will submit another job from the remaining 900. Without the throttle provided by this configuration variable, Condor-G would submit all 1000 jobs together, and the front-end node of the resource would be brought to its knees by the corresponding 1000 Globus jobmanager processes.

A second useful configuration variable is GRIDMANAGER_MAX_PENDING_SUBMITS_PER_RESOURCE, the maximum number of jobs that can be in the process of being submitted at any time (that is, how many globus_gram_client_job_request calls are pending). It is useful for controlling the number of new connections and processes created at a given time. It exists because of a problem that occurred when Condor-G tried to submit 100 jobs at one time, and inetd on the remote host thought it was the start of a denial-of-service attack (and therefore disabled the gatekeeper service for a short period).

GRIDMANAGER_MAX_SUBMITTED_JOBS_PER_RESOURCE limits the total number of jobs that Condor-G will have submitted to a remote resource at any given time. GRIDMANAGER_MAX_PENDING_SUBMITS_PER_RESOURCE limits how fast Condor-G will submit jobs to a remote resource. As an example, the configuration

    GRIDMANAGER_MAX_SUBMITTED_JOBS_PER_RESOURCE=100
    GRIDMANAGER_MAX_PENDING_SUBMITS_PER_RESOURCE=20

will have Condor-G submit jobs in sets of 20 until a total of 100 have been submitted.
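The interaction of the two limits can be illustrated with a toy model. The following Python sketch is not Condor-G code. The function name simulate_throttle, the division of time into rounds, and the assumption that every job runs for a fixed number of rounds (run_rounds) are all hypothetical and chosen only to make the behavior easy to follow.

```python
from collections import deque


def simulate_throttle(total_jobs, max_submitted, max_pending, run_rounds=10):
    """Toy model of the two per-resource limits (not Condor-G's algorithm).

    Returns one (new_submits, jobs_at_resource) tuple per round.
    """
    waiting = total_jobs   # jobs still only in the local queue
    running = deque()      # batches at the resource: (finish_round, count)
    at_resource = 0        # jobs submitted to the resource and not yet finished
    history = []
    round_no = 0
    while waiting > 0 or at_resource > 0:
        round_no += 1
        # Retire batches whose (assumed) run time has elapsed.
        while running and running[0][0] <= round_no:
            _, count = running.popleft()
            at_resource -= count
        # Start at most max_pending submits, never exceeding max_submitted.
        new_submits = min(max_pending, max_submitted - at_resource, waiting)
        if new_submits > 0:
            waiting -= new_submits
            at_resource += new_submits
            running.append((round_no + run_rounds, new_submits))
        history.append((new_submits, at_resource))
    return history


history = simulate_throttle(total_jobs=1000, max_submitted=100, max_pending=20)
print(history[:6])
```

With the values from the example above, the model submits 20 jobs in each of the first five rounds, then submits nothing more until jobs at the resource finish, so the number of jobs at the resource never exceeds 100.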

See section 3.3 for more information about configuration file entries. See section 3.3.18 for information about configuration file entries specific to the Condor-G gridmanager.

Condor-G periodically checks for an updated proxy at an interval given by the configuration variable GRIDMANAGER_CHECKPROXY_INTERVAL. The value is defined in terms of seconds. For example, if you create a 12-hour proxy, and then 6 hours later re-run grid-proxy-init, Condor-G will check the proxy within this time interval, and use the new proxy it finds there. The default interval is 10 minutes.

Condor-G also knows when the proxy of each job will expire. If the proxy has not been refreshed by the time GRIDMANAGER_MINIMUM_PROXY_TIME seconds remain before it expires, the Condor-G grid manager daemon exits. Since the grid manager daemon keeps track of all jobs associated with a proxy, its tasks (such as authentication, file transfer, and job log maintenance) will not occur once it has exited. So, if GRIDMANAGER_MINIMUM_PROXY_TIME is 180, and the proxy is 3 minutes away from expiring, Condor-G will attempt to shut down safely, instead of simply losing contact with the remote job because it is unable to authenticate. The default setting is 3 minutes (180 seconds).
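The arithmetic behind these two variables can be written down in a few lines. This Python sketch is only an illustration of the timing described here, not the gridmanager's implementation. The function names are hypothetical, all times are in seconds, and the assumption that proxy checks happen at exact multiples of the check interval is a simplification.

```python
import math


def should_shut_down(proxy_expires_at, now, minimum_proxy_time=180):
    """True when no more than minimum_proxy_time seconds of proxy life remain."""
    return proxy_expires_at - now <= minimum_proxy_time


def first_check_after(refresh_time, check_interval=600):
    """Time of the first periodic check at or after the proxy was refreshed.

    Assumes checks happen at multiples of check_interval, counted from 0.
    """
    return math.ceil(refresh_time / check_interval) * check_interval


# A 12-hour proxy created at time 0, with the default 180-second minimum.
expires = 12 * 3600
print(should_shut_down(expires, now=expires - 181))
print(should_shut_down(expires, now=expires - 180))
# Proxy refreshed 6 hours and 1 minute in, with the default 10-minute interval.
print(first_check_after(6 * 3600 + 60))
```

In this model a refreshed proxy is always noticed within one check interval, which is why refreshing the proxy well before the minimum proxy time is reached keeps the daemon running.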

This section contains what users need to know to run and manage jobs under the globus universe.

Under Condor, successful job submission to the Globus universe requires credentials. An X.509 certificate is used to create a proxy, and an account, authorization, or allocation to use a grid resource is required. For more information on proxies and certificates, please consult the Alliance PKI pages at

http://archive.ncsa.uiuc.edu/SCD/Alliance/GridSecurity/

Before submitting a job to Condor under the Globus universe, make sure you have your Grid credentials and have used grid-proxy-init to create a proxy.

A job is submitted for execution to Condor using the condor_submit command. condor_submit takes as an argument the name of a file called a submit description file. The following sample submit description file runs a job on the Origin2000 at NCSA.

    executable = test
    globusscheduler = modi4.ncsa.uiuc.edu/jobmanager
    universe = globus
    output = test.out
    log = test.log
    queue

The executable for this example is transferred from the local machine to the remote machine. By default, Condor transfers the executable, as well as any files specified by the input command. Note that this executable must be compiled for the correct intended platform.

The globusscheduler command depends on the scheduling software available on the remote resource. This required command will change based on the Grid resource intended for execution of the job. A jobmanager is the Globus service that is spawned at a remote site to submit, keep track of, and manage Grid I/O for jobs running on the local batch system there. There is a specific jobmanager for each type of batch system supported by Globus (examples are Condor, LSF, and PBS).

All Condor-G jobs (intended for execution on Globus-controlled resources) are submitted to the globus universe. The universe = globus command is required in the submit description file.

No input file is specified for this example job. Any output (the file specified by the output command) or error (the file specified by the error command) is transferred from the remote machine to the local machine as it is produced. This implies that these files may be incomplete in the case where the executable does not finish running on the remote resource. The job log file is maintained on the local machine.

To submit this job to Condor-G for execution on the remote machine, use

    condor_submit test.submit

where test.submit is the name of the submit description file.

Example output from condor_q for this submission looks like:

    % condor_q

    -- Submitter: wireless48.cs.wisc.edu : <128.105.48.148:33012> : wireless48.cs.wi
     ID      OWNER            SUBMITTED     RUN_TIME ST PRI SIZE CMD
       7.0   epaulson        3/26 14:08   0+00:00:00 I  0   0.0  test

    1 jobs; 1 idle, 0 running, 0 held

After a short time, Globus accepts the job. Running condor_q again will now result in

    % condor_q

    -- Submitter: wireless48.cs.wisc.edu : <128.105.48.148:33012> : wireless48.cs.wi
     ID      OWNER            SUBMITTED     RUN_TIME ST PRI SIZE CMD
       7.0   epaulson        3/26 14:08   0+00:01:15 R  0   0.0  test

    1 jobs; 0 idle, 1 running, 0 held

Then, very shortly after that, the queue will be empty again, because the job has finished:

    % condor_q

    -- Submitter: wireless48.cs.wisc.edu : <128.105.48.148:33012> : wireless48.cs.wi
     ID      OWNER            SUBMITTED     RUN_TIME ST PRI SIZE CMD

    0 jobs; 0 idle, 0 running, 0 held

A second example of a submit description file runs the Unix ls program on a different Globus resource.

    executable = /bin/ls
    Transfer_Executable = false
    globusscheduler = vulture.cs.wisc.edu/jobmanager
    universe = globus
    output = ls-test.out
    log = ls-test.log
    queue

In this example, the executable (the binary) has been pre-staged. The executable is on the remote machine, and it is not to be transferred before execution. Note that the required globusscheduler and universe commands are present. The command

    Transfer_Executable = FALSE

within the submit description file identifies the executable as being pre-staged. In this case, the executable command gives the path to the executable on the remote machine.

A third example submits a Perl script to be run as a Condor job. The Perl script lists the environment variables of the job, including the ones set in the submit description file. Save the following Perl script with the name env-test.pl, to be used as a Condor job executable.

    #!/usr/bin/env perl

    # Print every environment variable of the job, sorted by name.
    foreach my $key (sort keys(%ENV))
    {
        print "$key = $ENV{$key}\n";
    }

    exit 0;

Run the Unix command

    chmod 755 env-test.pl

to make the Perl script executable.

Now create the following submit description file, replacing biron.cs.wisc.edu/jobmanager with a resource you are authorized to use:

    executable = env-test.pl
    globusscheduler = biron.cs.wisc.edu/jobmanager
    universe = globus
    environment = foo=bar; zot=qux
    output = env-test.out
    log = env-test.log
    queue

When the job has completed, the output file env-test.out should contain something like this:

    GLOBUS_GRAM_JOB_CONTACT = https://biron.cs.wisc.edu:36213/30905/1020633947/
    GLOBUS_GRAM_MYJOB_CONTACT = URLx-nexus://biron.cs.wisc.edu:36214
    GLOBUS_LOCATION = /usr/local/globus
    GLOBUS_REMOTE_IO_URL = /home/epaulson/.globus/.gass_cache/globus_gass_cache_1020633948
    HOME = /home/epaulson
    LANG = en_US
    LOGNAME = epaulson
    X509_USER_PROXY = /home/epaulson/.globus/.gass_cache/globus_gass_cache_1020633951
    foo = bar
    zot = qux

Of particular interest is the GLOBUS_REMOTE_IO_URL environment variable. Condor-G automatically starts up a GASS remote I/O server on the submitting machine. Because of the potential for either side of the connection to fail, the URL for the server cannot be passed directly to the job. Instead, it is put into a file, and the GLOBUS_REMOTE_IO_URL environment variable points to this file. Remote jobs can read this file and use the URL it contains to access the remote GASS server running inside Condor-G. If the location of the GASS server changes (for example, if Condor-G restarts), Condor-G will contact the Globus gatekeeper and update this file on the machine where the job is running. It is therefore important that all accesses to the remote GASS server check this file for the latest location.
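The rule that every access should re-read this file can be captured in a small helper. The following Python sketch is an illustration only. The function names current_gass_url and build_copy_command are hypothetical, and the sketch assumes that the file named by GLOBUS_REMOTE_IO_URL contains just the URL and that globus-url-copy is found under $GLOBUS_LOCATION/bin and takes a source URL followed by a destination URL.

```python
import os


def current_gass_url(environ=None):
    """Read the GASS server URL from the file named by GLOBUS_REMOTE_IO_URL.

    The file is read on every call, so a URL updated by Condor-G after a
    restart is picked up by the next access.
    """
    environ = os.environ if environ is None else environ
    with open(environ["GLOBUS_REMOTE_IO_URL"]) as handle:
        return handle.read().strip()


def build_copy_command(remote_path, local_path, environ=None):
    """Build an argument list that copies remote_path from the GASS server.

    Assumes the globus-url-copy program lives in $GLOBUS_LOCATION/bin.
    """
    environ = os.environ if environ is None else environ
    url_copy = os.path.join(environ["GLOBUS_LOCATION"], "bin", "globus-url-copy")
    source = current_gass_url(environ) + remote_path
    destination = "file://" + os.path.abspath(local_path)
    return [url_copy, source, destination]
```

A job would call build_copy_command immediately before each transfer rather than caching the URL at start-up, and pass the resulting list to a process launcher such as subprocess.run.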

The following example is a Perl script that uses the GASS server in Condor-G to copy input files to the execute machine. In this example, the remote job copies the file /etc/hosts from the submit machine and prints its contents.

    #!/usr/bin/env perl
    use FileHandle;
    use Cwd;

    STDOUT->autoflush();

    # Read the current GASS server URL from the file named by GLOBUS_REMOTE_IO_URL.
    $gassUrl = `cat $ENV{GLOBUS_REMOTE_IO_URL}`;
    chomp $gassUrl;

    $ENV{LD_LIBRARY_PATH} = $ENV{GLOBUS_LOCATION} . "/lib";
    $urlCopy = $ENV{GLOBUS_LOCATION} . "/bin/globus-url-copy";

    # globus-url-copy needs a full pathname
    $pwd = getcwd();
    print "$urlCopy $gassUrl/etc/hosts file://$pwd/temporary.hosts\n\n";
    `$urlCopy $gassUrl/etc/hosts file://$pwd/temporary.hosts`;

    # Print the copied file.
    open(my $file, "<", "temporary.hosts") or die "cannot open temporary.hosts: $!";
    while (<$file>) {
        print $_;
    }
    close($file);

    exit 0;

The submit description file used to submit the Perl script as a Condor job appears as:

    executable = gass-example.pl
    globusscheduler = biron.cs.wisc.edu/jobmanager
    universe = globus
    output = gass.out
    log = gass.log
    queue

There are two optional submit description file commands of note: x509userproxy and globusrsl. The x509userproxy command specifies the path to an X.509 proxy. The command is of the form:

    x509userproxy = /path/to/proxy

If this optional command is not present in the submit description file, then Condor-G checks the value of the environment variable X509_USER_PROXY for the location of the proxy. If this environment variable is not present, then Condor-G looks for the proxy in the file /tmp/x509up_u0000, where the trailing zeros in this file name are replaced with the Unix user id.
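The lookup order for the proxy can be summarized in code. This Python sketch only restates the three steps described here and is not taken from Condor-G. The function name find_proxy_path and the use of a dictionary to stand in for the submit description file are assumptions made for illustration.

```python
import os


def find_proxy_path(submit_commands, environ=None, uid=None):
    """Return the proxy location using the order described in the text.

    submit_commands is a dict standing in for the submit description file.
    """
    environ = os.environ if environ is None else environ
    uid = os.getuid() if uid is None else uid
    # 1. The x509userproxy command in the submit description file.
    if "x509userproxy" in submit_commands:
        return submit_commands["x509userproxy"]
    # 2. The X509_USER_PROXY environment variable.
    if "X509_USER_PROXY" in environ:
        return environ["X509_USER_PROXY"]
    # 3. The default file name, which ends with the Unix user id.
    return "/tmp/x509up_u%d" % uid


print(find_proxy_path({}, environ={}, uid=1000))
```

For a user with Unix user id 1000 and neither the submit command nor the environment variable set, the sketch yields /tmp/x509up_u1000.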

The globusrsl command is used to add additional attribute settings to a job's RSL string. The format of the globusrsl command is

    globusrsl = (name=value)(name=value)

Here is an example of this command from a submit description file:

    globusrsl = (project=Test_Project)

This example's attribute name for the additional RSL is project, and the value assigned is Test_Project.

Condor requires space characters to delimit the arguments in a submit description file such as

    arguments = 13 argument2 argument3

This example results in the 3 arguments:

    argv[1] = 13
    argv[2] = argument2
    argv[3] = argument3

With Condor-G, arguments are passed through using RSL. Therefore, arguments are parsed in a way that allows space characters to be delimiters or to be parts of arguments. The single quote character delimits some or all arguments such that

    arguments = '%s' 'argument with spaces' '+%d'

results in

    argv[1] = %s
    argv[2] = argument with spaces
    argv[3] = +%d

Should the arguments themselves contain the single quote character, an escaped double quote character may be used. The example

    arguments = \"don't\" \"mess with\" \"quoting rules\"

results in

    argv[1] = don't
    argv[2] = mess with
    argv[3] = quoting rules

And, if the job arguments have both single and double quotes, the appearance of the quote character twice in a row is converted to a single instance of the character and the literal continues until the next solo quote character. For example

    arguments = 'don''t yell \"blah!\"' '+%s'

results in

    argv[1] = don't yell "blah!"
    argv[2] = +%s
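The quoting rules can be checked against the examples with a small tokenizer. The following Python sketch implements the rules only as they are described in this section. It is not the parser used by Condor-G or by RSL, and the function name split_rsl_arguments is hypothetical. It assumes that \" in the submit description file stands for a plain double quote character.

```python
def split_rsl_arguments(text):
    """Split an arguments string following the rules described in the text."""
    # An escaped double quote in the submit file stands for a double quote.
    text = text.replace('\\"', '"')
    args = []
    i, n = 0, len(text)
    while i < n:
        if text[i].isspace():
            i += 1                      # spaces between arguments are skipped
            continue
        current = []
        if text[i] in ("'", '"'):
            quote = text[i]             # the literal ends at the next solo quote
            i += 1
            while i < n:
                if text[i] == quote:
                    if i + 1 < n and text[i + 1] == quote:
                        current.append(quote)   # doubled quote: one literal quote
                        i += 2
                        continue
                    i += 1              # solo quote: end of this argument
                    break
                current.append(text[i])
                i += 1
        else:
            while i < n and not text[i].isspace():
                current.append(text[i])
                i += 1
        args.append("".join(current))
    return args


print(split_rsl_arguments("'%s' 'argument with spaces' '+%d'"))
```

Run on the four arguments lines shown in this section, the sketch produces the same argv lists as the ones given. Input that the text does not describe, such as an unterminated quote, is handled arbitrarily by this sketch.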

When you remove a job with condor_rm, you may find that the job enters the "X" state for a very long time. This is normal: Condor-G is attempting to communicate with the remote Globus resource and ensure that the job has been properly cleaned up. If it takes too long or (in rare circumstances) is never removed, you can force the job to leave the job queue by using the -forcex option to condor_rm. This will forcibly remove jobs that are in the X state without attempting to finish any cleanup at the remote resource.

Glidein is a mechanism by which one or more Grid resources (remote machines) temporarily join a local Condor pool. The program condor_glidein is used to add a machine to a Condor pool. During the period of time when the added resource is part of the local pool, the resource is visible to users of the pool, but the resource is only available for use by the user that added the resource to the pool.

After glidein, the user may submit jobs for execution on the added resource the same way that all Condor jobs are submitted. To force a submitted job to run on the added resource, the submit description file contains a requirement that the job run specifically on the added resource.

The local Condor pool configuration file(s) must give HOSTALLOW_WRITE permission to every resource that will be added using condor_glidein. Wildcards are permitted in this specification. For example, you can add every machine at cs.wisc.edu by adding *.cs.wisc.edu to the HOSTALLOW_WRITE list. Recall that you must run condor_reconfig for configuration file changes to take effect.

Make sure that the Condor and Globus tools are in your PATH.

condor_glidein first contacts the Globus resource and checks for the presence of the necessary configuration files and Condor executables. If the executables are not present for the machine architecture, operating system version, and Condor version required, a server running at UW is contacted to transfer the needed executables. You can also set up your own server for condor_glidein to contact. To gain access to the server or learn how to set up your own server, send email to condor-admin@cs.wisc.edu.

When the files are correctly in place, Condor daemons are started. condor_glidein does this by creating a submit description file for condor_submit, which runs the condor_master under the Globus universe. This implies that execution of the condor_master is started on the Globus resource. The Condor daemons exit gracefully when no jobs run on the daemons for a configurable period of time. The default length of time is 20 minutes.

The Condor daemons on the Globus resource contact the local pool and attempt to join the pool. The START expression for the condor_startd daemon requires that the username of the person running condor_glidein matches the username of the jobs submitted through Condor.

After a short length of time, the Globus resource can be seen in the local Condor pool, as with this example.

    % condor_status | grep denal
    7591386@denal IRIX65      SGI    Unclaimed  Idle       3.700  24064  0+00:06:35

Once the Globus resource has been added to the local Condor pool with condor_glidein, job(s) may be submitted. To force a job to run on the Globus resource, specify that Globus resource as a machine requirement in the submit description file. Here is an example from within the submit description file that forces submission to the Globus resource denali.mcs.anl.gov:

    requirements = ( machine == "denali.mcs.anl.gov" ) \
       && FileSystemDomain != "" \
       && Arch != "" && OpSys != ""

This example requires that the job run only on denali.mcs.anl.gov, and it prevents Condor from inserting the filesystem domain, architecture, and operating system attributes as requirements in the matchmaking process. Condor must be told not to use the submission machine's attributes in those cases where the Globus resource's attributes do not match the submission machine's attributes.

In its simplest usage, Condor-G allows users to specify the single grid site they wish to submit their job to. Often this is sufficient: perhaps a user knows exactly which grid site they wish to use, or a higher-level resource broker (such as the European Data Grid's resource broker) has decided which grid site should be used. But when users have a variety of sites to choose from and there is no other resource broker to make the decision, Condor-G can use matchmaking to decide which grid site a job should run on.

Please note that Condor-G's matchmaking ability is relatively new. Work is being done to improve it and make it easier to use. For now, please expect some rough edges.

Condor-G uses the same matchmaking mechanism that Condor uses: the condor_collector and condor_negotiator daemons, which are described in Section 3.1.2.

Two changes are required to use Condor-G's matchmaking. First, advertise grid sites that are available so that they are known and considered during the matchmaking process. This is accomplished by writing ClassAd attributes and using condor_advertise to place the attributes into the ClassAd used in matchmaking. The second change is to the submit description file. This file needs to specify requirements that describe what type of grid site can be used, instead of identifying a specific grid site.

Each grid site that is available for matching purposes needs to be advertised to the condor_collector. Normally in Condor this is done with the condor_startd daemon, and you do not normally need to be aware of the contents of this advertisement. Currently, there is no equivalent to the condor_startd daemon for advertising grid sites, so you need to have a deeper understanding.

To properly advertise a grid site, a ClassAd needs to be sent periodically to the condor_collector. A ClassAd is a list of attributes and values that describe a job, a machine, or a grid site. ClassAds are briefly described in Section 2.3 and some of the common attributes of machine ClassAds are described in Section 2.5.2.

When you advertise a grid site, it looks very similar to a ClassAd for a machine. In fact, the condor_collector will believe it is a machine, but with a different set of attributes.

To advertise a grid site, you first need to describe the site in a file. Here is a sample ClassAd that describes a grid site:

    # This is a comment
    MyType = "Machine"
    TargetType = "Job"
    Name = "Example1_Gatekeeper"
    gatekeeper_url = "grid.example.com/jobmanager"
    Requirements = CurMatches < 10
    Rank = 0.000000
    CurrentRank = 0.000000
    WantAdRevaluate = True
    UpdateSequenceNumber = 4
    CurMatches = 0

Let's look at each line:

# This is a comment

Your file can have comments that begin with the hash mark (#).

MyType = "Machine"

Your grid site is pretending to be a single machine, for the purpose of matchmaking. MyType is an attribute that the condor_negotiator will expect to be a string. Strings must be surrounded by double-quote marks, as in this example. You may have surprising, unintuitive errors if they are not quoted. You will always want MyType to be "Machine".

TargetType = "Job"

This is an attribute that says the grid site (machine) wants to be matched with a job. Leave this as it is.

Name = "Example1_Gatekeeper"

You will want a unique name for each grid site. Any name is fine, as long as it is quoted.

gatekeeper_url = "grid.example.com/jobmanager"

This is the Globus gatekeeper contact string for your grid site. It is probably a machine name followed by a slash followed by the name of the jobmanager. If you have different job managers, you can only specify one per ClassAd.

UpdateSequenceNumber = 4

UpdateSequenceNumber is a positive number that must increase each time you advertise a grid site. Normally you advertise your grid site every five minutes. The condor_collector will discard a grid site's ClassAd after 15 minutes if there have been no updates. A good number to set this to is the current time in seconds (the epoch, as given by the C time() function call), but if you are worried about your clock running backward, you can set it to whatever you like. If ClassAds are received with a sequence number older than the last ClassAd, they are ignored.

CurMatches = 0

This number is incremented each time a match is made for this grid site. Unlike a normal machine ClassAd that can only be matched against once, grid site advertisements can be matched against many times.

You will probably want to set this number to be the number of grid jobs that you have running on your site, and keep it updated each time you submit a new ClassAd. If you do not specify CurMatches, Condor will assume it is 0.

Condor will increment this number every time it makes a match against a grid site.

Requirements = CurMatches < 10

These are the requirements that the grid site insists must be true before it will accept a job. These could refer to features of the job's ClassAd. In this case, we'll take any job, as long as we have fewer than 10 matches currently. This will ensure that Condor-G will only run 10 jobs at your site, assuming that you keep CurMatches up to date when jobs finish. Of course, you can edit this statement to have different requirements. For example, if you want to accept all jobs, you can have "Requirements = True".

Rank = 0.000000 CurrentRank = 0.000000

This is a numerical ranking that will be assigned to a job. Right now it is not used, but should be set to 0.

WantAdRevaluate = True

The "WantAdRevaluate" attribute is what distinguishes grid site ClassAds from normal machine ClassAds and allows multiple matches to be made against a single site. It should be in your ad and should be true. Note that True is not in quotes, and it should not be.

You can add other attributes to your ClassAd, to make it easy for a job to decide which grid site it wants to use. For instance, if you have pre-installed the Bamboozle software environment on your grid site, you could advertise "HaveBamboozle = True" and "BamboozleVersion = 10". Jobs can require a grid site that has Bamboozle installed by extending their requirements with "HaveBamboozle == True". (Note the double equal sign in the requirements.)

As an aside, we recommend that jobs that need specific applications should bring them with them instead of relying on having them pre-installed at a Grid site. You will have more reliable execution if you do.

Once you have a file that describes your site, you need to send it to the condor_collector. For this, you can use the condor_advertise program. We recommend that you write a script to create the file containing the ClassAd, then run the script every five minutes with cron. The script should probably update the CurMatches variable if you want to restrict the number of grid jobs that can be submitted at one time.

For condor_advertise you will need to specify UPDATE_STARTD_AD for the update command. For example, if your ClassAd is specified in a file named "grid-ad" you would do:

condor_advertise UPDATE_STARTD_AD grid-ad

condor_advertise usually uses UDP to transmit your ClassAd. In wide-area networks, this may be insufficient. You can use TCP by specifying the "-tcp" option.
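The script that creates the ClassAd file could look like the following Python sketch. It is one possible shape for such a script, not a tool shipped with Condor. The function names render_grid_ad and write_grid_ad are hypothetical, the attribute values are the ones from the sample ClassAd, and how you count the jobs currently at your site (cur_matches) is left to you. The sketch uses the current time in seconds as the UpdateSequenceNumber.

```python
import time


def render_grid_ad(name, gatekeeper_url, cur_matches, max_matches=10, now=None):
    """Return the text of a grid site ClassAd like the sample in this section."""
    # The current time in seconds increases with every run of the script.
    sequence = int(time.time()) if now is None else int(now)
    lines = [
        'MyType = "Machine"',
        'TargetType = "Job"',
        'Name = "%s"' % name,
        'gatekeeper_url = "%s"' % gatekeeper_url,
        "Requirements = CurMatches < %d" % max_matches,
        "Rank = 0.000000",
        "CurrentRank = 0.000000",
        "WantAdRevaluate = True",
        "UpdateSequenceNumber = %d" % sequence,
        "CurMatches = %d" % cur_matches,
    ]
    return "\n".join(lines) + "\n"


def write_grid_ad(path, **fields):
    """Write the ClassAd to path, ready to be sent with condor_advertise."""
    with open(path, "w") as handle:
        handle.write(render_grid_ad(**fields))


print(render_grid_ad("Example1_Gatekeeper", "grid.example.com/jobmanager", 0))
```

A cron job would call write_grid_ad with the path grid-ad every five minutes and then run the condor_advertise command shown above on the resulting file.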

Submitting a job to Condor-G that requires matchmaking is straightforward. Instead of specifying a particular scheduler with globusscheduler like this:

globusscheduler = grid.example.com/jobmanager

you instead specify requirements and tell Condor-G where to find the gatekeeper URL in the grid site ClassAd:

    globusscheduler = $$(gatekeeper_url)
    requirements = true

This will allow the job to run at any grid site, and will extract the gatekeeper_url attribute from the ClassAd. There is no magic meaning behind gatekeeper_url; you could use GatekeeperContactString if you desired, as long as it is the same in both the job description and the grid site ClassAd.

The requirements specified here are a bit simple. Perhaps you only want to run at a site that has the Bamboozle software installed, and the sites that have it installed specify "HaveBamboozle = True", as described above. A complete job description may look like this:

    universe = globus
    executable = analyze_bamboozle_data
    output = aaa.$(Cluster).out
    error = aaa.$(Cluster).err
    log = aaa.log
    globusscheduler = $$(gatekeeper_url)
    requirements = HaveBamboozle == True
    leave_in_queue = jobstatus == 4
    queue

What if a job fails to run at a grid site due to an error? It will be returned to the queue, and Condor will attempt to match it and re-run it at another site. Condor isn't very clever about avoiding sites that may be bad, but you can give it some assistance. Let's say that you want to avoid running at the last grid site you ran at. You could add this to your job description:

    match_list_length = 1
    Rank = TARGET.Name != LastMatchName0

This will prefer to run at a grid site that was not just tried, but it will allow the job to be run there if there is no other option.

When you specify match_list_length, you provide an integer N, and Condor will keep track of the last N matches. The most recent match will be LastMatchName0, the next most recent will be LastMatchName1, and so on. (See the condor_submit manual page for more details.) The Rank expression allows you to specify a numerical ranking for different matches. When combined with match_list_length, you can prefer to avoid sites that you have already run at.
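The effect of the Rank expression in the match_list_length = 1 example can be modeled in a few lines. This Python sketch is a simplified illustration, not the negotiator's matchmaking algorithm. The function names rank and choose_site are hypothetical, the rank is modeled as 1.0 or 0.0, and the case where no previous match exists is treated as "every site is acceptable", which is a simplification of how ClassAd expressions are evaluated.

```python
def rank(site_name, last_match_name0):
    """Model of Rank = TARGET.Name != LastMatchName0 as a number."""
    return 1.0 if site_name != last_match_name0 else 0.0


def choose_site(candidate_sites, last_match_name0):
    """Pick the candidate with the highest rank (first one wins ties).

    candidate_sites holds the names of sites that already satisfy the
    job's requirements.
    """
    return max(candidate_sites, key=lambda site: rank(site, last_match_name0))


print(choose_site(["siteA", "siteB"], "siteA"))
print(choose_site(["siteA"], "siteA"))
```

The first call prefers siteB because the job just ran at siteA. The second call still returns siteA because it is the only candidate, which matches the statement that the job is allowed to run there if there is no other option.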

In addition, condor_submit has two options to help you control Condor-G job resubmissions and rematching. See globus_resubmit and globus_rematch in the condor_submit manual page. These options are independent of match_list_length.

There are some new attributes that will be added to the Job ClassAd, and may be useful to you when you write your rank, requirements, globus_resubmit or globus_rematch option. Please refer to Section 2.5.2 and read about the following option:

If you are concerned about unknown or malicious grid sites reporting to your condor_collector, you should use Condor's security options, documented in Section 3.7.